Constrained minimization problems

Contents

Constrained minimization problems#

Considering the general optimization problem again

with optimization variable \(x \in \mathbb{R}^{n}\) and twice continuously differentiable objective and constraint functions \(f, g_{i}, h_{j} \in C^{2}(\mathbb{R}^{n}, \mathbb{R})\).

The set of feasible points

with \(g := (g_{1}, \ldots, g_{m})^{T}\), \(h := (h_{1}, \ldots, h_{p})^{T}\) is assumed to be not empty.

For a feasible point \(x \in X\) the set of active inequalities is denoted by

For a local minimum \(x^{*} \in X\) the active inequalities can be treated as equations, the inactive ones don’t matter.

The Lagrangian#

The key idea is to augment the objective function \(f(x)\) with a weighted sum of the constraint functions.

The Lagrangian \(L \colon \mathbb{R}^{n} \times \mathbb{R}^{m} \times \mathbb{R}^{p} \to \mathbb{R}\) to the optimization problem (1) is defined as:

The Lagrange multipliers \(\lambda := (\lambda_{1}, \ldots, \lambda_{m})^{T}\) and \(\mu := (\mu_{1}, \ldots, \mu_{p})^{T}\) are associated with the \(i\)-th and \(j\)-th constraint function, respectively.

KKT optimality conditions#

Having the Lagrangian defined, similar to the unconstrained optimization problem, first- and higher-order optimality conditions can be defined.

The first-order conditions are called Karush–Kuhn–Tucker (KKT) optimality conditions.

Theorem 9: KKT optimality conditions

For a local minimum \(x^{*}\) of the optimization problem (1) let the gradients

be linear independent (LICQ = Linear independence constraint qualification).

Then there exist unique Lagrange multipliers \(\lambda^{*} \in \mathbb{R}^{m}\) and \(\mu^{*} \in \mathbb{R}^{p}\), such that for the Lagrangian

the following necessary conditions hold:

(1) Stationarity

(2) Complementary slackness

(3) Primal feasibility

(4) Dual feasibility

Points \((x^{*}, \lambda^{*}, \mu^{*})\) fulfilling the KKT optimality conditions are referred to as KKT-points.

Study the exercises RM01, RM02, and RM03 to apply the KKT optimality conditions.

For a proof and more detailed discussion on the KKT optimality conditions, see [BV04] (chapter 5) and [LY16] (chapter 11).

Summarizing key ideas:

The KKT optimality conditions help to find optimal solutions for constraint optimization problems by defining a non-linear system of equations to solve.

\[\begin{split} \begin{aligned} \nabla_{x} L &= 0 \\ \nabla_{\lambda} L &= 0 \\ \nabla_{\mu} L &= 0 \\ \end{aligned} \end{split}\]Many solution algorithms for constraint optimization problems solve the KKT optimality conditions numerically.

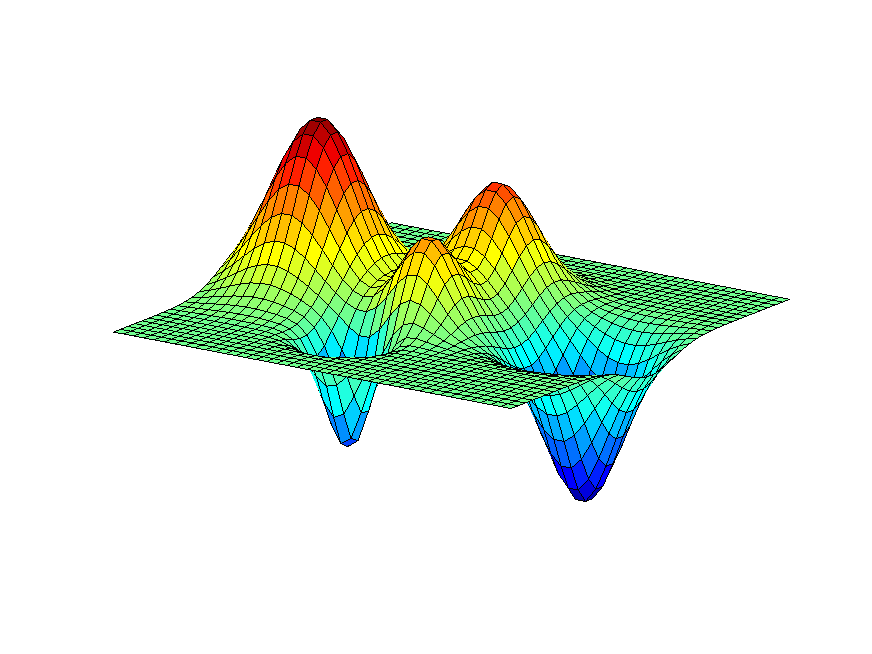

KKT-points \((x^{*}, \lambda^{*})\) are saddle-points of the Lagrangian.

Approach to find a supporting hyperplane on the feasible set of inequality constraints

\[ \left\{ x \in X \colon\; g_{i}(x) \leq 0, \quad i = 1, \ldots ,m \right\}. \]The proof of the KKT optimality conditions makes use of the hyperplane separation theorem.

From the Lagrangian and the KKT optimality conditions to each optimization problem (1) a convex dual optimization problem can be formulated. See [BV04] (chapter 5).